Apache Kafka is an open-source, distributed event-streaming platform that is often used as a message queuing system. Kafka supports real-time data processing and runs as a set of servers and clients, either locally or in the cloud, on Linux, Windows, or Mac platforms. Kafka’s messages are persisted on disk and replicated within the cluster to prevent data loss.

Some typical Kafka use cases are stream processing, log aggregation, data ingestion to Spark or Hadoop, error recovery, etc.

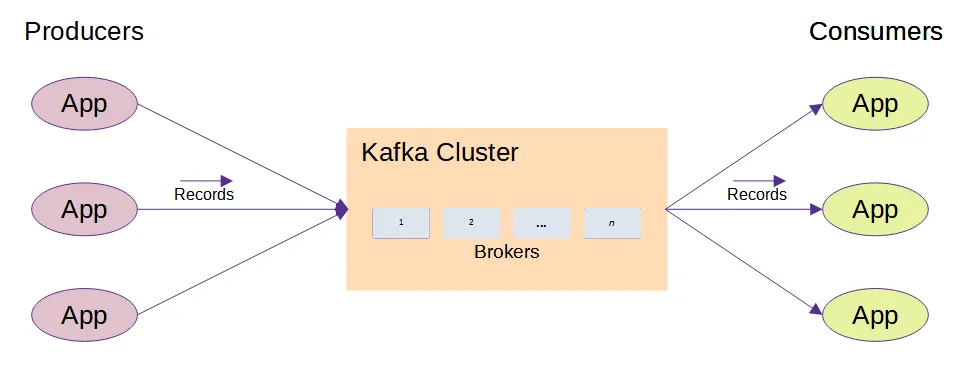

Basic Architecture

There are four main components:

- The Producer — The client apps that write their Events to Topics in the Kafka queue

- The Topic — Topics are named streams of related Events that Kafka stores. They are multi-producer, multi-subscriber (Consumer), decoupled, and can have any number of subscribers or none at all

- The Broker — Each Broker is a Kafka server that organizes incoming Events by Topic and appends them sequentially to Partitions, which are stored on disk as Segment files

- The Consumer — The apps that subscribe to Kafka Topics

A Kafka cluster is made of one or more servers, called Brokers. Topics live in one or more Partitions on one or more Brokers.

As Producers write events to a Topic, the Brokers append each message to a Segment within one of that Topic's Partitions. Kafka writes each Event to exactly one Partition of the Topic: the Partition is chosen from the hash of the Event's key when a key is present, and the Producer spreads Events across Partitions when it is not. Within a Partition, Events are appended in strict arrival order. Because the writes are spread across all Brokers that host Partitions for that Topic, there is no single Broker or Partition that contains the full, sequential list of Events for that Topic. Each Partition only holds a subset of Event records in its Segments.
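To make the routing concrete, here is a minimal sketch of how a keyed event might be mapped to a partition. It is a toy model, not Kafka client code: `choose_partition`, `route`, and `NUM_PARTITIONS` are hypothetical names, and CRC32 stands in for the murmur2 hash that Kafka's default partitioner uses.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 3  # hypothetical partition count for one topic


def choose_partition(key: bytes, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a record key to a partition index.

    Stand-in hash: Kafka's default partitioner uses murmur2, not CRC32.
    """
    return zlib.crc32(key) % num_partitions


def route(events):
    """Group (key, value) events by the partition their key maps to."""
    partitions = defaultdict(list)
    for key, value in events:
        partitions[choose_partition(key)].append(value)
    return dict(partitions)


events = [
    (b"user-1", "login"),
    (b"user-2", "signup"),
    (b"user-1", "add-to-cart"),
    (b"user-1", "checkout"),
]
routed = route(events)
```

Because the same key always hashes to the same partition, all of `user-1`'s events end up in one partition in the order they were sent, while no single partition necessarily holds every event for the topic.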

Kafka Producers

Producers are client applications that write Events to Topics in the Kafka Cluster.

Kafka Brokers

Brokers receive event streams from Producers and store them by Topic in one or more Partitions across one or more Brokers. Each Broker can handle many Partitions in its storage. Every message is stored with an Offset, a sequential ID that marks its position within its Partition.

For example, when a Topic has three Partitions and a Producer sends three Events without keys, the first Event could land in Partition 2, the second in Partition 1, and the third in Partition 3. Within each Partition, records are appended in order and receive increasing Offsets, so a Consumer reading one Partition always sees its records in the order they were written. Kafka does not guarantee ordering across Partitions, so Events that must stay in order should share a key and therefore a Partition.
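As a rough illustration of offsets, the sketch below models a single partition as an append-only list in which each record's offset is simply its position. `PartitionLog` and its methods are hypothetical names used for illustration only; a real broker stores records in segment files on disk.

```python
class PartitionLog:
    """Toy append-only log standing in for one Kafka partition."""

    def __init__(self):
        self._records = []

    def append(self, value):
        """Append a record and return the offset assigned to it."""
        offset = len(self._records)
        self._records.append(value)
        return offset

    def read(self, start_offset, max_records=100):
        """Return (offset, value) pairs starting at start_offset."""
        end = min(start_offset + max_records, len(self._records))
        return [(i, self._records[i]) for i in range(start_offset, end)]


log = PartitionLog()
offsets = [log.append(v) for v in ("a", "b", "c")]
tail = log.read(1)  # records from offset 1 onward, in write order
```

Offsets are only meaningful inside one partition, which is why ordering holds per partition rather than across a whole topic.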

Additionally, you can configure how long Kafka retains Events, or how much Event data it keeps, as suitable for the application.
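Time-based retention (controlled per topic by the `retention.ms` setting) can be pictured with the simplified model below. It assumes that whole segments are removed once their newest record falls outside the retention window and that the last, active segment is always kept. `Segment` and `apply_retention` are hypothetical names, and the real broker logic has more cases than this sketch covers.

```python
from dataclasses import dataclass

HOUR_MS = 3_600_000


@dataclass
class Segment:
    base_offset: int          # offset of the first record in the segment
    newest_timestamp_ms: int  # timestamp of the most recent record


def apply_retention(segments, now_ms, retention_ms):
    """Keep segments whose newest record is still inside the retention window.

    The last segment is treated as the active one and always kept
    (a simplification of what a real broker does).
    """
    if not segments:
        return []
    closed, active = segments[:-1], segments[-1]
    kept = [s for s in closed if now_ms - s.newest_timestamp_ms <= retention_ms]
    return kept + [active]


segments = [
    Segment(base_offset=0, newest_timestamp_ms=0),
    Segment(base_offset=100, newest_timestamp_ms=5 * HOUR_MS),
    Segment(base_offset=200, newest_timestamp_ms=9 * HOUR_MS),
]
remaining = apply_retention(segments, now_ms=10 * HOUR_MS, retention_ms=6 * HOUR_MS)
```

With a six-hour window evaluated at hour ten, only the oldest segment is dropped; the offsets of the surviving records do not change.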

The Topic

Kafka organizes events by Topic and may store a Topic in multiple Partitions on multiple Brokers. Replicating Partitions provides reliability, and spreading reads and writes across multiple computers enhances performance by avoiding the I/O bottlenecks that a single Broker might entail. Topics are assigned Topic IDs.

Kafka Consumers

Consumers are apps that read Events from Kafka Topics. Consumers poll the Brokers for new messages and track their position in each Partition by Offset, so they pick up new messages as they arrive in the queue.
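The pull model can be sketched with a toy consumer that remembers the next offset to fetch and commits its position after processing a batch. `ToyConsumer` is a hypothetical stand-in that reads from an in-memory list; it is not the Kafka consumer API.

```python
class ToyConsumer:
    """Reads a list-backed partition by polling from its own position."""

    def __init__(self, partition_records):
        self._records = partition_records  # shared list standing in for a partition
        self.position = 0                  # next offset to fetch
        self.committed = 0                 # position saved by the last commit

    def poll(self, max_records=2):
        """Fetch up to max_records starting at the current position."""
        batch = self._records[self.position:self.position + max_records]
        self.position += len(batch)
        return batch

    def commit(self):
        """Record how far this consumer has read."""
        self.committed = self.position


partition = ["e0", "e1", "e2"]
consumer = ToyConsumer(partition)

first = consumer.poll()   # reads offsets 0 and 1
consumer.commit()
partition.append("e3")    # a producer appends while the consumer is idle
second = consumer.poll()  # picks up where it left off
third = consumer.poll()   # nothing new yet, so the batch is empty
```

Because the consumer owns its position, it can resume from the committed offset after a restart or rewind to an earlier offset to replay messages.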

Benefits

- I/O Performance — Spreading Event records across multiple Brokers/Partitions avoids I/O bottlenecks that could occur if they were all written into a single Partition, while each Partition is still written as a fast sequential append.

- Scalability — Kafka scales horizontally by increasing the number of Brokers in the cluster.

- Data Redundancy — You can configure Kafka to write each event to multiple brokers.

- High-Concurrency, low-latency, high-throughput

- Fault-Tolerant

- Message Broker Capabilities

- Batch Handling Capability (providing ETL-like functionality)

- Persistent by default
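The Data Redundancy point in the list above can be illustrated with a simplified replica placement. The sketch assigns each partition to `replication_factor` brokers in round-robin order and treats the first broker in each list as the leader. `assign_replicas` is a hypothetical helper, and Kafka's actual assignment logic is more involved than this.

```python
def assign_replicas(num_partitions, brokers, replication_factor):
    """Return {partition: [broker ids]} using a simple round-robin layout."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for partition in range(num_partitions):
        assignment[partition] = [
            brokers[(partition + replica) % len(brokers)]
            for replica in range(replication_factor)
        ]
    return assignment


layout = assign_replicas(num_partitions=3, brokers=[1, 2, 3], replication_factor=2)
```

In this layout every partition lives on two different brokers, so losing any single broker still leaves one copy of each partition available.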

Advantages Of Apache Kafka

Real-time data analysis provides faster insights into your data, allowing faster response times. For example, a retailer can make predictions about what should be stocked, promoted, or pulled from the shelves based on the most up-to-date information possible.

Even on very large systems, Kafka operates very quickly. You can stream all data in real time to make decisions based on current information, rather than waiting until the data has been obtained, aggregated, and analyzed, which is the case for many companies with large datasets.

Kafka is written in Java and Scala, and its official client library is Java, so it is approachable for developers who already work on the JVM.

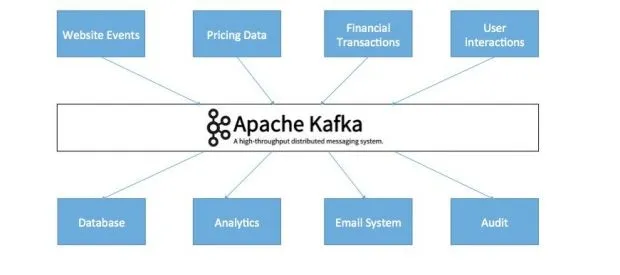

Use Cases For Kafka

Kafka is used for:

- Stream processing

- Website activity tracking

- Metrics collection and monitoring

- Log aggregation

- Real-time analytics

- Complex Event Processing support (CEP)

- Ingesting data into Spark

- Ingesting data into Hadoop

- Command Query Responsibility Segregation support (CQRS)

- Replay messages

- Error recovery

- Guaranteed distributed commit log for in-memory computing (microservices)

Closing Thoughts

Apache Kafka is a distributed streaming platform capable of handling trillions of events a day. Kafka provides low-latency, high-throughput, fault-tolerant publish and subscribe pipelines and is able to process streams of events.

Happy coding! To learn more about the implementation of Apache Kafka and to experience Kyle Pollack’s full Lightning Talk session, watch here.