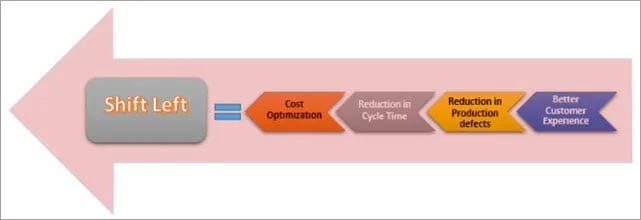

Shift Left Testing is a crucial, ongoing and ever-evolving trend in the software development space. It is, fundamentally, about pushing testing earlier in the software development life cycle, testing early and often, and improving a software product’s quality through better planning and procedure.

This all sounds nice on paper but it can be unclear what the practical steps are for shifting left. What does it mean to test earlier? What exactly is the problem with waiting until you have completed a milestone or deliverable to think about testing? How can you gain confidence in a product before you have an MVP or launch?

- Overview

- Defining Shift Left

- The Problem

- A Brief History

- Five Solutions

- Closing Thoughts

- The InRhythm Propel Summit And Our Core Values

Defining Shift Left

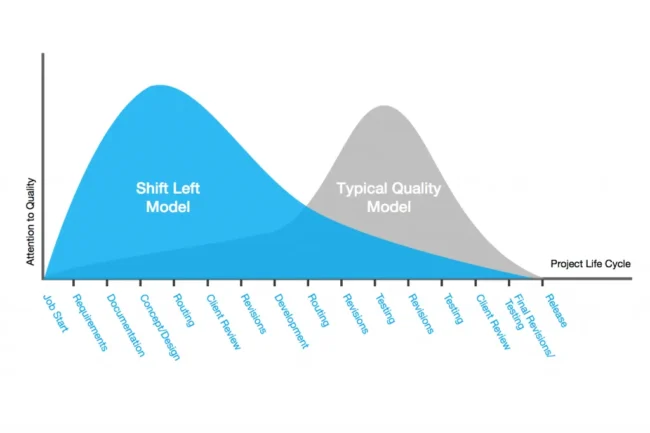

Shift Left Testing is an approach that involves considering, planning, and executing tests earlier in the software development life cycle.

In a typical product, you have a product design phase, followed by an architecture design phase, a development phase, testing, deployment, and maintenance. In an absolute worst case scenario, testing isn’t even considered until the testing stage, in the latter half of the pipeline.

Practically, most modern software companies are considering testing at least during coding, but we can push it even further. Why not at least think about testing as early as the design phase?

Why not write our tests at the same time as we write our requirements? Why not architect our systems based not just on cost and time, but on testability? Why save all the quality work for the end of the SDLC when we can think about quality early?

The Problem

It is common knowledge that the closer a software project is to completion, the more expensive it is to change. Decades of wisdom have resulted in a multitude of solutions meant to address this: agile development, DevOps, and shift left included. The lesson from all of these is that rapid iteration, failing fast, and early planning save time and effort later.

This wisdom, of course, applies to testing and defect remediation as well. Let’s consider the worst case scenario described before: a waterfall-style development project where the software is passed off to testers when the first MVP is completed.

In this scenario, QA is not even considered until the implementation is ready. Analysts and test engineers first need to spend time designing and developing a test plan for the software based on the implementation. In many cases, the first pass of a test plan is inadequate and lacks detail. Approaching testing with this late mindset also tends to result in underestimating the QA effort or the inability to allocate adequate resources.

Once a test plan has been put together, testing occurs and defects are found. These defects run into two major problems:

- First off, debugging defects gets more difficult the more mature the software is. As a software implementation matures, it becomes more complex and integrated. Engineers start to make assumptions that they take for granted, and it becomes more difficult to understand how things are integrated. Moreover, logs, tracer bullets, and other debugging tools that engineers utilize when implementing software may be disabled, deprecated, or abandoned as the implementation matures.

- Second, defects are more expensive to remediate later in the development cycle. In addition to defects being more difficult to debug, engineers are generally tasked with critical planning and new feature development at the MVP stage. This product evolution is inevitably halted when engineers are forced to stop improving the product in order to go back and fix old defects. This results in a bottleneck at the QA stage, where the team must spend time examining, iterating on, and fixing old functionality.

This is a worst case scenario, but the problems propagate up even to well-tuned agile development teams. There is a pervasive habit of waiting until a feature or story is code complete before “handing off” to QA. In addition, we can run into problems where design and architecture are not conducive to testing, and a reliance on writing tests as features are completed leads to a “catch up” mentality where testing is trying to keep pace with development.

Overall, we can break the problem down into a few points:

- Thinking about testing after implementation ultimately results in lower quality tests

- Thinking about testing after implementation tends to result in difficult-to-test architecture

- Debugging test failures is more difficult later in development

- Remediating bugs is more expensive later in development

- There tends to be not enough time allocated to QA, resulting in bottlenecking and missed milestones

A Brief History

Before addressing the problems, let’s consider a brief history of Shift Left testing. There are four widely accepted methods of Shifting Left: traditional shift left, incremental shift left, agile/devops, and model-based shift left.

- The Traditional and Incremental methods are about considering good Integration and Unit testing during the waterfall implementation phase. The shift left occurs because we think about testing one phase early, but these models are not really relevant in the modern software world. Most software projects nowadays are agile, and for good reason.

- Most modern software projects with good testing follow the Agile/Devops method. It applies the same idea as the previous two methods, but to agile projects specifically: we consider integration and unit tests during each sprint’s implementation phase. This is a great place to be and a good start to shift left, but we can take it even further.

- Few projects follow the model-based method, in which we consider testing even before implementation, at the requirements and architecture phase. This is the final and ideal evolution of shift left, where QA is a part of the process from the beginning.

Good planning and collaboration lead to a smooth late project cycle, and ultimately a higher quality project.

Five Solutions

With the history out of the way, let’s consider five actionable steps you can take to help a project shift left and alleviate the previously described problems.

1: Maintain A Strong Testbed That Adheres To The Testing Pyramid

Nowadays everyone is familiar with the Testing Pyramid. As a brief reminder, it asserts that an effective test plan should weight its testing effort toward the cheapest and fastest types of tests, with fewer tests at each more expensive layer.

Ideally the test plan includes a large number of unit tests. These are the bottom of the pyramid. Unit tests are the fastest and cheapest to run. They can live inside the component that they test, and provide a good sanity check on that component’s health.

In the center, we have our Integration tests. These tests typically still focus on API or back-end functionality. They operate on two components at once, testing the “junction” point between them to ensure compatibility. These tests are generally more expensive than unit tests in terms of maintenance, run time, and debug time, but provide more coverage and software confidence.

Lastly, we have our E2E tests. These tests exercise the entire system, usually by mimicking user behavior at the UI level. These are your most expensive tests, taking the most time to run, maintain, and debug. However, they give you the most confidence in the application’s health.

What does this have to do with Shift Left? As previously stated, all models of Shifting Left involve implementing more unit and integration tests. These tests don’t rely on the software being fully implemented. They can be developed and executed in tandem with a project’s backend. So consider developing and maintaining a comprehensive suite of integration and unit tests.
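To make the bottom two layers of the pyramid concrete, here is a minimal sketch using Python’s built-in `unittest` module. The names `apply_fee`, `InMemoryLedger`, and `post_transaction` are hypothetical and invented for this illustration; a real project would have its own components.

```python
import unittest


def apply_fee(amount_cents, fee_percent):
    """Hypothetical unit under test: add a percentage fee to an amount."""
    return amount_cents + (amount_cents * fee_percent) // 100


class InMemoryLedger:
    """Hypothetical second component: stores posted amounts."""

    def __init__(self):
        self.entries = []

    def record(self, amount_cents):
        self.entries.append(amount_cents)


def post_transaction(ledger, amount_cents, fee_percent):
    """The junction point: the fee calculation feeds the ledger."""
    ledger.record(apply_fee(amount_cents, fee_percent))


class ApplyFeeUnitTest(unittest.TestCase):
    # Unit test: one function, no collaborators.
    def test_adds_percentage_fee(self):
        self.assertEqual(apply_fee(1000, 2), 1020)


class PostTransactionIntegrationTest(unittest.TestCase):
    # Integration test: exercises the junction between two components.
    def test_fee_inclusive_amount_reaches_ledger(self):
        ledger = InMemoryLedger()
        post_transaction(ledger, 1000, 2)
        self.assertEqual(ledger.entries, [1020])


def run_suite():
    """Load both test classes and run them, returning the result object."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    suite.addTests(loader.loadTestsFromTestCase(ApplyFeeUnitTest))
    suite.addTests(loader.loadTestsFromTestCase(PostTransactionIntegrationTest))
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Neither test needs a UI or a deployed system, which is why tests like these can be written while the backend is still taking shape.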

2: Evaluate The Testability Of Features During The Design And Architecture Phase

The first thing any quality assurance professional does when assigned a story for QA is evaluate its testability. That is: based on the architecture of this feature or story, how can I take concrete steps with measurable outcomes to ensure that it fulfills its requirements?

When we evaluate testability after implementation, we sometimes run into situations where the feature cannot be tested, or cannot be tested extensively enough. However, if we consider testability before implementation, we can potentially identify weak points and remedy them before engineering is locked in.

We can do this in four ways: by ensuring test data is easy to set up, by ensuring tests can always validate their outcome, by anticipating environmental constraints, and by evaluating time dependencies during the design phase.

- First off, we should ensure test data is easy to set up, access, and maintain

We shouldn’t need to guess if our test data is valid. We should not need to rely on luck to find data. We should not have to give up on testing if good data is unavailable. We can anticipate flaky or complicated data and design the database to simplify it, make it easy to look up and change. We can architect data backups and scheduled synchronizations earlier to reduce the cost of data maintenance later.

- Another thing we can do is ensure tests can always directly validate their outcome

When a test cannot do so, we end up with what some call side-effect testing. Essentially, the feature has no directly measurable outcome, but we can infer that it’s working by testing another feature.

For example, we’re testing that when we POST a transaction to a bank account, it should appear on the ledger. However, due to some flaw in the design, we cannot directly access the ledger in the test environment. Therefore, the only way for us to check that the transaction was added is to look at the balance before and after the API call. We’re not checking that our POST is working directly; we’re checking a side effect.

This has several problems. For example, it assumes that the account is only being operated on by this test. If another change occurs at the same moment as the test, the before and after numbers will not match. So why not just make the transaction ledger viewable from the start?
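The difference between the two approaches can be sketched in a few lines of Python. Everything here is hypothetical: `Account` is an in-memory stand-in for the bank account service, and `interfering_amount` simulates another test or job touching the same account mid-test.

```python
import uuid


class Account:
    """Hypothetical in-memory stand-in for the bank account service."""

    def __init__(self):
        self.balance = 0
        self.ledger = {}  # transaction id -> amount

    def post_transaction(self, amount):
        """Stands in for the POST call; returns the new transaction's id."""
        tx_id = str(uuid.uuid4())
        self.ledger[tx_id] = amount
        self.balance += amount
        return tx_id


def side_effect_check(account, amount, interfering_amount=0):
    """Infer success from the balance delta (the fragile approach)."""
    before = account.balance
    account.post_transaction(amount)
    if interfering_amount:
        # Someone else changes the same account while our test runs.
        account.post_transaction(interfering_amount)
    return account.balance - before == amount


def direct_check(account, amount, interfering_amount=0):
    """Look up our own transaction on the ledger (the direct approach)."""
    tx_id = account.post_transaction(amount)
    if interfering_amount:
        account.post_transaction(interfering_amount)
    return account.ledger.get(tx_id) == amount
```

With an interfering change, `side_effect_check` reports a failure even though the POST worked, while `direct_check` still passes because it looks up its own transaction by id.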

- Additionally, we should anticipate our environmental constraints

Sometimes, it can be expensive to make things testable. Maybe we can’t afford the AWS costs of maintaining both a QA and a PLT test environment, or we only have enough licenses for a third-party tool to support prod and one other environment.

If throwing more money at the problem isn’t a solution, we need to pre-emptively address these weaknesses in the design. Make it so that features that rely on these resources can be diverted, mocked, or stubbed. Maybe allow some form of data injection. We can architect these solutions to only work in non-prod environments. Maybe allow one environment to serve multiple purposes depending on configuration.
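One way to sketch a stub that only works outside production is to pick the implementation from configuration. The `APP_ENV` variable, its values, and the rate-provider classes below are assumptions made for this example, not part of any particular framework.

```python
import os


class RealRateProvider:
    """Placeholder for a licensed third-party client (hypothetical)."""

    def get_rate(self, currency):
        raise RuntimeError("would call the licensed third-party service")


class StubRateProvider:
    """Canned responses for environments without a license."""

    def __init__(self, rates):
        self._rates = dict(rates)

    def get_rate(self, currency):
        return self._rates[currency]


def build_rate_provider(env=None):
    """Choose the implementation from configuration.

    APP_ENV and its values are assumptions made for this sketch.
    Defaulting to "prod" means a missing setting never enables the stub.
    """
    env = env or os.environ.get("APP_ENV", "prod")
    if env == "prod":
        return RealRateProvider()
    return StubRateProvider({"USD": 1.0, "EUR": 0.9})
```

Because the choice is made in one place, tests in a lower environment can rely on predictable data without consuming a license.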

- The last thing we should evaluate is time dependency

Time dependency is kind of inevitable, especially in financial systems. A classic example is waiting for data to be written or refreshed at the start or end of the business week.

For example, we have a lower environment ledger that only gets written to at the end of the business week. We want to test that we can perform a transaction, and that it will eventually propagate down to that lower environment. In this case, the business design of this feature makes it difficult to test, and we probably don’t want to change it, so let’s just create pathways to test it in the architecture itself. For example, we should be able to force the sync via an API call, or flag a transaction to be written immediately instead of on the schedule. Or both!
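Both escape hatches can be sketched together. The `WeeklySyncLedger` class and its `allow_test_hooks` flag are hypothetical; in a real system the forced sync would more likely sit behind an API endpoint enabled only in lower environments.

```python
class WeeklySyncLedger:
    """Hypothetical ledger whose downstream copy is written on a schedule."""

    def __init__(self, allow_test_hooks):
        self.allow_test_hooks = allow_test_hooks
        self.pending = []
        self.downstream = []

    def post(self, amount, write_immediately=False):
        # The immediate-write flag is honored only where hooks are allowed.
        if write_immediately and self.allow_test_hooks:
            self.downstream.append(amount)
        else:
            self.pending.append(amount)

    def run_scheduled_sync(self):
        """What the end-of-week job would call."""
        self.downstream.extend(self.pending)
        self.pending.clear()

    def force_sync(self):
        """Test hook: trigger the sync on demand instead of waiting."""
        if not self.allow_test_hooks:
            raise PermissionError("force_sync is disabled in this environment")
        self.run_scheduled_sync()
```

The business rule (write at the end of the week) stays intact; the test simply gets a way to reach the same code path without waiting for the schedule.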

3: Build Tooling Into The Environment During The Engineering Phase

Evaluating testability is about planning, but we can sometimes take concrete steps to make our software more testable early. Aside from altering our design, we can build the tools and resources we need for testing into our software or environment. We can do this in three ways: by identifying useful testing functionality and building it into our services, by considering Swagger, and by building visibility into our architecture.

- First, We Can Identify Useful Testing Functionality For Your Services, And Build Them In As API Endpoints

For example, consider an API that flushes a service’s cache, or one that generates new test data automatically. You can enable these debug-only APIs on lower environments only, and they can make test setup and teardown much easier.
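As a framework-free sketch of the idea, the function below builds a route table and registers the debug route only in lower environments. The paths, the environment names `dev` and `qa`, and the dictionary-backed cache are all assumptions for illustration.

```python
def build_routes(env, cache):
    """Return a path -> handler mapping for a hypothetical service."""

    def get_item(key):
        return cache.get(key)

    def flush_cache():
        cache.clear()
        return "flushed"

    routes = {"/items": get_item}
    # "dev" and "qa" are assumed names for lower environments.
    if env in {"dev", "qa"}:
        routes["/debug/flush-cache"] = flush_cache
    return routes
```

In production the debug route is never registered, so there is nothing to forget to switch off.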

- Second, We Can Consider Building Out Swagger Pages For APIs

Swagger pages expose easy testing to everyone on a development team. Shifting Left is a team-wide effort, and so exposing base testing functionality to the entire team is pivotal to help everyone get on board with testing.

It also doubles as an easy way to document your APIs and an easy way to expose those previously mentioned debug-only APIs to the team.

- Third, We Should Build Logging And Visibility Into Our Architecture

As stated before, one significant problem with late testing is the difficulty of debugging. Sometimes logs are available, but oftentimes they are deprecated, not maintained, or not up to date. When we consider logging early in the architecture phase, we can think about leveraging certain tools, such as Elasticsearch, Splunk, or Datadog, to provide visibility into our services. Or, if we are using a messaging service, something like AMQBrowser or Kadeck.

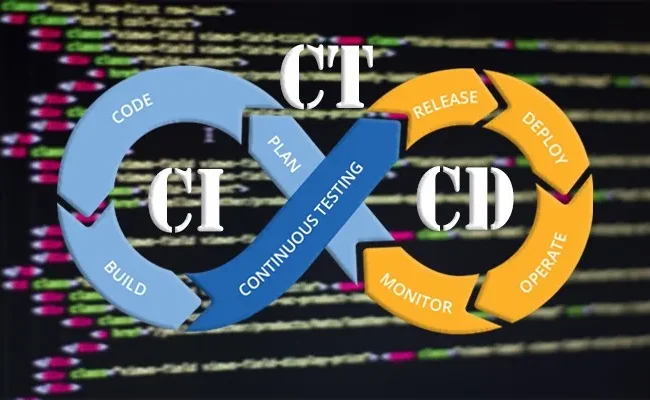

4: Leverage CI/CD To Build Testing Into Your Deployment Strategy

As previously stated, Shift Left Testing is not just about testing earlier; it’s also about testing more often, usually by integrating testing into our CI/CD pipelines. We can do this by implementing good test gates and by implementing test schedules.

- First Off, A Test Gate Is A Requirement In Your CI/CD That Depends On A Test Run To Either Proceed Or Error Out

For example, a push to remote is gated by unit tests. Some engineering teams even set it up so that a developer can’t commit locally if the tests are failing. Next up, you can gate your merge to develop with integration tests. This can help prevent introducing regressions into the application caused by contract changes or difficult-to-track integration logic. E2E tests generally do not gate anything, but a high E2E test failure rate in a lower environment is a sign that the build is not prod ready.

This step synergizes with the first Shift Left strategy of maintaining a strong testbed that adheres to the testing pyramid. A good testbed lets you build smart test gates that actually help to improve code quality and reduce regressions.
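The gating logic itself is simple, whatever CI/CD product implements it. The sketch below models each gate as a named check that returns pass or fail; the `run_pipeline` helper is hypothetical, and in a real pipeline each check would invoke a test runner and inspect its exit code.

```python
from typing import Callable, List, Tuple

Gate = Tuple[str, Callable[[], bool]]


def run_pipeline(gates: List[Gate]) -> Tuple[bool, List[str]]:
    """Run gates in order and stop at the first failure.

    Returns (passed, names of the gates that actually ran).
    """
    ran = []
    for name, check in gates:
        ran.append(name)
        if not check():
            # A failing gate blocks everything after it.
            return False, ran
    return True, ran


# Example wiring: unit tests gate the push, integration tests gate the merge.
example_gates = [
    ("unit tests", lambda: True),
    ("integration tests", lambda: False),
    ("deploy", lambda: True),
]
```

Running `run_pipeline(example_gates)` stops at the integration gate and never reaches the deploy step, which is exactly the behavior a gate is meant to enforce.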

- Second, We Should Implement Regular Testing Schedules

Daily test runs are critical not just for observing environmental health, but also for obtaining critical historical data. It can be really useful to look at the history of a test, see 1000 runs where the last 300 failed, and know the exact timestamp at which the tests began to fail. And daily test runs are just useful in general to gauge the application’s health at different stages of development.

This can synergize with good test management tools, like TestRail or XRay.
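Given a stored run history, finding when the current failure streak began takes only a few lines. This sketch assumes the history is available as a list of `(timestamp, passed)` pairs sorted oldest first; the `failing_since` helper is hypothetical and not an API of any test management tool.

```python
from datetime import datetime
from typing import List, Optional, Tuple

Run = Tuple[datetime, bool]  # (timestamp, passed)


def failing_since(history: List[Run]) -> Optional[datetime]:
    """Return when the current unbroken run of failures began.

    `history` must be sorted oldest first. Returns None if the latest
    run passed or if there is no history at all.
    """
    started = None
    # Walk backwards from the newest run until we hit a pass.
    for timestamp, passed in reversed(history):
        if passed:
            break
        started = timestamp
    return started
```

That timestamp can then be compared against deployment or merge times to narrow down which change introduced the failure.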

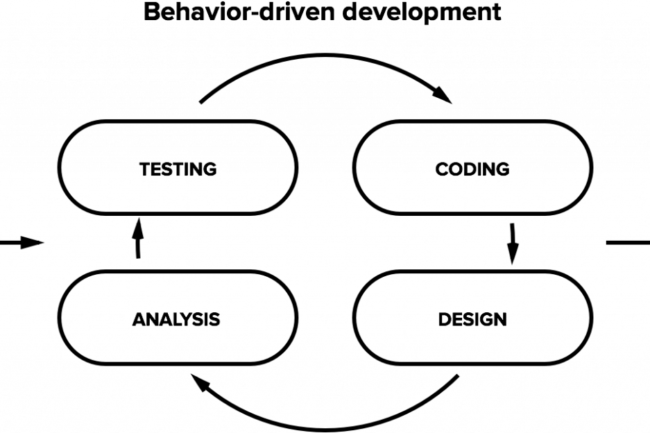

5: Consider BDD To Tie The Tests Directly To Your Story’s Requirements

The last shift left strategy we’ll talk about is using BDD to write tests into stories as requirements. This one is key to elevating our Shift Left strategy from Agile/Devops to Model-based. With BDD, or behavior driven development, we can write and iterate on tests as early as the design phase, treat our tests as requirements, and involve the business in the quality process.

Behavior Driven Development is an engineering methodology that involves writing tests in the Gherkin format before the feature is even developed. This format makes the test readable and writable in plain language with no dependency on system architecture. When written before the implementation, these tests will obviously fail, but as the feature is slowly completed, these tests should begin to pass one by one.
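To show the mechanics, here is a toy sketch in Python. It is not a real BDD framework; it only handles bare Given/When/Then lines, whereas real Gherkin files also carry `Feature` and `Scenario` headers and are executed by dedicated tools. The `step` decorator, `run_scenario` function, and the scenario text are all invented for this illustration.

```python
import re

# Plain-language steps, written before the feature exists.
SCENARIO = """
Given an account with a balance of 100
When I post a transaction of 25
Then the ledger shows 1 transaction
"""

STEPS = []  # (compiled pattern, function) pairs


def step(pattern):
    """Register a function as the implementation of a scenario line."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


def run_scenario(text, context):
    """Run each line; return 'undefined' if a step has no implementation yet."""
    for line in text.strip().splitlines():
        # Drop the leading keyword (Given/When/Then).
        body = line.strip().split(" ", 1)[1]
        for pattern, func in STEPS:
            match = pattern.fullmatch(body)
            if match:
                func(context, *match.groups())
                break
        else:
            return "undefined"
    return "passed"


@step(r"an account with a balance of (\d+)")
def given_account(context, balance):
    context["balance"] = int(balance)
    context["ledger"] = []


@step(r"I post a transaction of (\d+)")
def when_post(context, amount):
    context["ledger"].append(int(amount))
    context["balance"] += int(amount)


@step(r"the ledger shows (\d+) transaction")
def then_ledger(context, count):
    assert len(context["ledger"]) == int(count)
```

The scenario text can be agreed on with the business during design; the step functions are filled in as the feature is built, and until then the scenario reports as undefined rather than passing.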

In the worst case scenario mentioned earlier, a story or feature doesn’t get in front of the eyes of a tester until development is complete. The tester must then analyze and understand the feature to design tests for it. This is a potential bottleneck, and does not leave much room for iteration and improvement. When we write our tests in BDD format before engineering begins, we build an understanding of a feature early in the SDLC, and give ourselves ample time to iterate and expand on the tests.

- The BDD Methodology Does Explicitly Treat Tests As Requirements, But As A Team We Can Do That As Well

We can consider a story only code ready when it has test requirements, and that story only dev complete when those test requirements are fulfilled. This provides concrete definitions of done for both the designer and the engineer that tie directly to testing.

- Lastly, The BDD Methodology Explicitly Involves The Business In The Quality Process

Nobody should know the product as well as the product owners and business analysts that design its features. So when we involve them in the quality process, we inevitably get higher quality tests faster. Product and business can be critical in identifying the core high value tests that provide the most confidence in a feature, and identifying particular weak points in a design from a business perspective.

Closing Thoughts

To review, Shift Left testing solves a myriad of problems with traditional linear software development, including bottlenecking, difficult-to-debug defects, and most importantly time wasted on bad tests, bad architecture, and bad code. Most organizations are partway there, with an Agile/Devops shift left strategy that has them implementing integration and unit tests as part of their agile sprints, but we can take it a step further.

We can build a strong testbed using Unit, Integration, and E2E tests. We can evaluate testability during the requirements and architecture phase. We can build tooling and utilize third-party tools to improve the testability and debuggability of our software during the implementation phase. We can use CI/CD to stabilize our product and monitor its health. And lastly, we can use BDD to begin writing our tests before we even begin implementation.

Shift Left Testing is a mature trend in software development, and while we’ve made significant progress as an industry, there is still room for improvement. As we continue to consider Shift Left and implement these strategies, we’ll eventually attain stable, highly effective software that corroborates developer efficiency and effectiveness.